STM Blog

Vaccine mandates misinformation: 2 experts explain the true role of slavery and racism in the history of public health policy – and the growing threat ignorance poses today

Vaccine mandates misinformation: Florida’s vaccination rates decline as the state plans to eliminate mandates. Experts warn this could deepen health disparities, undermine public trust, and threaten community health, especially given the history of racism in vaccination practices.


Lauren MacIvor Thompson, Kennesaw State University and Stacie Kershner, Georgia State University

On Sept. 3, 2025, Florida announced its plans to be the first state to eliminate vaccine mandates for its citizens, including those for children to attend school.

Current Florida law and the state’s Department of Health require that children who attend day care or public school be immunized for polio, diphtheria, rubeola, rubella, pertussis and other communicable diseases. Dr. Joseph Ladapo, Florida’s surgeon general and a professor of medicine at the University of Florida, has stated that “every last one” of these decades-old vaccine requirements “is wrong and drips with disdain and slavery.”

As experts on the history of American medicine and vaccine law and policy, we took immediate note of Ladapo’s use of the word “slavery.”

There is certainly a complicated history of race and vaccines in the United States. But, in our view, invoking slavery as a way to justify the elimination of vaccines and vaccine mandates will accelerate mistrust and present a major threat to public health, especially given existing racial health disparities. It also erases Black Americans’ key work in centuries of American public health initiatives, including vaccination campaigns.

What’s clear: Vaccines and mandates save human lives

Evidence and data show that vaccines work, as do mandates, in keeping Americans healthy. The World Health Organization reported in a landmark 2024 study that vaccines have saved more than 154 million lives globally in just the past 50 years.

In the United States, vaccines for children are one of the top public health achievements of the 20th century. Rates of eight of the most common vaccine-preventable diseases in school-age children dropped by 97% or more from pre-vaccine levels, preventing an estimated 1,129,000 deaths and resulting in direct savings of US$540 billion and societal savings of $2.7 trillion.

History of vaccine mandates in the United States

Vaccine mandates in the United States date to the Colonial period and have a complex history. During the American Revolution, George Washington required his troops to be inoculated against smallpox. Inoculation was the predecessor of vaccination.

To prevent outbreaks of this debilitating, disfiguring and deadly disease, state and local governments implemented smallpox inoculation and vaccination campaigns into the early 1900s. They targeted various groups, including enslaved people, immigrants, people living in tenement and other crowded housing conditions, manual laborers and others, forcibly vaccinating those who could not provide proof of prior vaccination.

Although religious exemptions were not recognized by law until the 1960s, some resisted these vaccination campaigns from the beginning, and 19th-century anti-vaccination societies urged the rollback of state laws requiring vaccination.

By the turn of the 20th century, however, the U.S. Supreme Court also began to intervene in matters of public health and vaccination. In Jacobson v. Massachusetts in 1905, the court upheld vaccine mandates in an effort to strike a balance between individual rights and the need to protect the public's health. In Zucht v. King in 1922, the court again ruled in favor of vaccine mandates, this time for school attendance.

Vaccine mandates expanded by the middle of the 20th century to include vaccines for many dangerous childhood diseases, such as polio, measles, rubella and pertussis. When Jonas Salk’s polio vaccine became available, families waited in long lines for hours to receive it, hoping to prevent their children from having to experience paralysis or life in an iron lung.

Scientific studies in the 1970s demonstrated that state declines in measles cases were correlated with enforcement of school vaccine mandates. The federal Childhood Immunization Initiative launched in the late 1970s helped educate the public on the importance of vaccines and encouraged enforcement. All states had mandatory vaccine requirements for public school entry by 1980, and data over the past several decades continues to demonstrate the importance of these laws for public health.

Most parents also continue to support school mandates. A survey conducted in July and August 2025 by The Washington Post and the Kaiser Family Foundation finds that 81% of parents support laws requiring vaccines for school.

Black Americans’ long fight for public health equity

Despite the proven success of vaccines and the importance of vaccine mandates in maintaining high vaccination rates, there is a vocal anti-vaccine minority in the U.S. that has gained traction since the COVID-19 pandemic.

Misinformation proliferates both online and off. Some of the misinformation originates in the historical realities of vaccines and social policy in the United States.

When Ladapo, the Florida surgeon general, invoked the term “slavery” to refer to vaccine mandates, he may have been referring to the history of racism in the medical field, such as the U.S. Public Health Service Untreated Syphilis Study at Tuskegee. The study, which started in 1932 and spanned four decades, involved hundreds of Black men who were recruited without their knowledge or consent so that researchers could study the effects of untreated syphilis. Investigators misled the participants about the nature of the study and actively withheld treatment – including penicillin, which became the standard therapy in the late 1940s – in order to study the effects of untreated syphilis on the men’s bodies.

Today, the study is remembered as one of the most egregious instances of racism and unethical experimentation in American medicine. Its participants had enrolled in the study because it was advertised as a chance to receive expert medical care but, instead, were subjected to lies and painful “treatments.”

Despite these experiences in the medical system, Black Americans have long advocated for better health care, connecting it to the larger struggle for racial equality.

Vaccination is no exception. Although they were often subjected to forced inoculation, enslaved people helped to lead the first American public health initiatives around epidemic disease. Historians' research on smallpox and slavery, for example, has found that inoculation was widely accepted and practiced by West Africans by the early 1700s, and that enslaved people brought the practice to the Colonies.

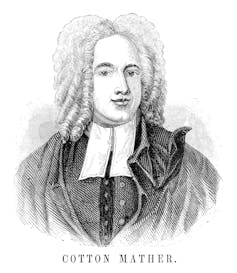

Although his role is often downplayed, an African man known as Onesimus introduced his enslaver Cotton Mather to inoculation.

Throughout the next century, enslaved people often continued to inoculate each other to prevent smallpox outbreaks, and enslaved and free people of African descent played critical roles in keeping their own communities as healthy as possible in the face of violence, racism and brutality. The modern Civil Rights Movement explicitly drew on this history and centered health equity for Black Americans as one of its key tenets, including working to provide access to vaccines for preventable diseases.

In our view, Ladapo’s reference to vaccines as “slavery” ignores this important and nuanced history, especially Black Americans’ role in the history of preventing communicable disease with vaccines.

Lessons to learn from Tuskegee

Ladapo’s word choice also runs the risk of perpetuating the rightful mistrust that continues to exist in communities of color about vaccines and the American health system more broadly. Studies show that lingering effects of Tuskegee and other instances of medical racism have had real consequences for the health and vaccination rates of Black Americans.

A large body of evidence shows the existence of persistent health disparities for Black people in the United States compared with their white counterparts, leading to shorter lifespans, higher rates of maternal and infant mortality and higher rates of communicable and chronic diseases, with worse outcomes.

Eliminating vaccine mandates in Florida and expanding exemptions in other states will continue to widen these already existing disparities that stem from past public health wrongs.

There is an opportunity here, however, for health officials, not just in Florida but across the nation, to work together to learn from the past in making American public health better for everyone.

Rather than weakening vaccine mandates, national, state and local public health guidance can focus on expanding access and communicating trustworthy information about vaccines for all Americans. Policymakers can acknowledge the complicated history of vaccines, public health and race, while also recognizing how advancements in science and medicine have given us the opportunity to eradicate many of these diseases in the United States today.

Lauren MacIvor Thompson, Assistant Professor of History and Interdisciplinary Studies, Kennesaw State University and Stacie Kershner, Deputy Director of the Center for Law, Health & Society, Georgia State University

This article is republished from The Conversation under a Creative Commons license. Read the original article.

The Bridge is a section of the STM Daily News Blog meant for diversity, offering real news stories about bona fide community efforts to perpetuate a greater good. The purpose of The Bridge is to connect the divides that separate us, fostering understanding and empathy among different groups. By highlighting positive initiatives and inspirational actions, The Bridge aims to create a sense of unity and shared purpose. This section brings to light stories of individuals and organizations working tirelessly to promote inclusivity, equality, and mutual respect. Through these narratives, readers are encouraged to appreciate the richness of diverse perspectives and to participate actively in building stronger, more cohesive communities.

https://stmdailynews.com/the-bridge

Discover more from Daily News

Subscribe to get the latest posts sent to your email.

The Long Track Back

Why Downtown Los Angeles Feels Small Compared to Other Cities

Downtown Los Angeles often feels “small” compared to other U.S. cities, but that’s only part of the story. With some of the tallest buildings west of the Mississippi and skyline clusters spread across the region, LA’s downtown reflects the city’s unique polycentric identity—one that, if combined, could form a true mega downtown.

Last Updated on February 18, 2026 by Daily News Staff

Panorama of Los Angeles from Mount Hollywood – California, United States

When people think of major American cities, they often imagine a bustling, concentrated downtown core filled with skyscrapers. New York has Manhattan, Chicago has the Loop, San Francisco has its Financial District. Los Angeles, by contrast, often leaves visitors surprised: “Is this really downtown?”

The answer is yes—and no.

Downtown LA in Context

Compared to other major cities, Downtown Los Angeles (DTLA) is relatively small as a central business district. For much of the 20th century, strict height restrictions capped most buildings at 150 feet, even as cities like Chicago and New York were erecting early skyscrapers. LA's skyline didn't really begin to climb until the late 1960s.

But history alone doesn’t explain why DTLA feels different. The real story lies in how Los Angeles grew: not as one unified city center, but as a collection of many hubs.


Downtown Los Angeles

A Polycentric City

Los Angeles is famously decentralized. Hollywood developed around the film industry. Century City rose on former studio land as a business hub. Burbank became a studio and aerospace center. Long Beach grew around the port. The Wilshire Corridor filled with office towers and condos.

Unlike other cities where downtown is the place for work, culture, and finance, Los Angeles spread its energy outward. Freeways and car culture made it easy for businesses and residents to operate outside of downtown. The result is a polycentric metropolis, with multiple “downtowns” rather than one dominant core.

A Resident’s Perspective

As someone who lived in Los Angeles for 28 years, I see DTLA differently. While some outsiders describe it as “small,” the reality is that Downtown Los Angeles is still significant. It has some of the tallest buildings west of the Mississippi River, including the Wilshire Grand Center and the U.S. Bank Tower. Over the last two decades, adaptive reuse projects have transformed old office buildings into lofts, while developments like LA Live, Crypto.com Arena, and the Broad Museum have revitalized the area.

In other words, DTLA is large enough—it just plays a different role than downtowns in other American cities.

View of Westwood, Century City, Beverly Hills, and the Wilshire Corridor.

The “Mega Downtown” That Isn’t

A friend once put it to me with a bit of imagination: “If you could magically pick up all of LA’s skyline clusters—Downtown, Century City, Hollywood, the Wilshire Corridor—and drop them together in one spot, you’d have a mega downtown.”

He’s right. Los Angeles doesn’t lack tall buildings or urban energy—it just spreads them out over a vast area, reflecting the city’s unique history, geography, and culture.

A Downtown That Fits Its City

So, is Downtown LA “small”? Compared to Manhattan or Chicago’s Loop, yes. But judged on its own terms, DTLA is a vibrant hub within a much larger, decentralized metropolis. It’s a downtown that reflects Los Angeles itself: sprawling, diverse, and impossible to fit neatly into the mold of other American cities.

🔗 Related Links

Dive into “The Knowledge,” where curiosity meets clarity. This playlist, in collaboration with STMDailyNews.com, is designed for viewers who value historical accuracy and insightful learning. Our short videos, ranging from 30 seconds to a minute and a half, make complex subjects easy to grasp in no time. Covering everything from historical events to contemporary processes and entertainment, “The Knowledge” bridges the past with the present. In a world where information is abundant yet often misused, our series aims to guide you through the noise, preserving vital knowledge and truths that shape our lives today. Perfect for curious minds eager to discover the ‘why’ and ‘how’ of everything around us. Subscribe and join in as we explore the facts that matter. https://stmdailynews.com/the-knowledge/


The Knowledge

How a 22-year-old George Washington learned how to lead, from a series of mistakes in the Pennsylvania wilderness

This Presidents Day, I’ve been thinking about George Washington − not at his finest hour, but possibly at his worst.

Christopher Magra, University of Tennessee


In 1754, a 22-year-old Washington marched into the wilderness surrounding present-day Pittsburgh with more ambition than sense. He had already volunteered to travel to the Ohio Valley to deliver a letter from Robert Dinwiddie, governor of Virginia, to the commander of French troops in the Ohio territory. This military mission sparked an international war, cost him his first command and taught him lessons that would shape the American Revolution.

As a professor of early American history who has written two books on the American Revolution, I’ve learned that Washington’s time spent in the Fort Duquesne area taught him valuable lessons about frontier warfare, international diplomacy and personal resilience.

The mission to expel the French

In 1753, Dinwiddie decided to expel French fur trappers and military forces from the strategic confluence of three mighty waterways that crisscrossed the interior of the continent: the Allegheny, Monongahela and Ohio rivers. This confluence is where downtown Pittsburgh now stands, but at the time it was wilderness.

King George II authorized Dinwiddie to use force, if necessary, to secure lands that Virginia was claiming as its own.

As a major in the Virginia provincial militia, Washington wanted the assignment to deliver Dinwiddie's demand that the French retreat. He believed the assignment would secure him a British army commission.

Washington received his marching orders on Oct. 31, 1753. He traveled to Fort Le Boeuf in northwestern Pennsylvania and returned a month later with a polite but firm “no” from the French.

Dinwiddie promoted Washington from major to lieutenant colonel and ordered him to return to the Ohio River Valley in April 1754 with 160 men. Washington quickly learned that French forces of about 500 men had already constructed the formidable Fort Duquesne at the forks of the Ohio. It was at this point that he faced his first major test as a military leader. Instead of falling back to gather more substantial reinforcements, he pushed forward. This decision reflected an aggressive, perhaps naive, brand of leadership characterized by a desire for action over caution.

Washington’s initial confidence was high. He famously wrote to his brother that there was “something charming” in the sound of whistling bullets.

The Jumonville affair and an international crisis

Perhaps the most controversial moment of Washington’s early leadership occurred on May 28, 1754, about 40 miles south of Fort Duquesne. Guided by the Seneca leader Tanacharison – known as the “Half King” – and 12 Seneca warriors, Washington and his detachment of 40 militiamen ambushed a party of 35 French Canadian militiamen led by Ensign Joseph Coulon de Jumonville. The Jumonville affair lasted only 15 minutes, but its repercussions were global.

Ten of the French, including Jumonville, were killed. Washington’s inability to control his Native American allies – the Seneca warriors executed Jumonville – exposed a critical gap in his early leadership. He lacked the ability to manage the volatile intercultural alliances necessary for frontier warfare.

Washington also allowed one enemy soldier to escape to warn Fort Duquesne. This skirmish effectively ignited the French and Indian War, and Washington found himself at the center of a burgeoning international crisis.

Defeat at Fort Necessity

Washington then made the fateful decision to dig in and call for reinforcements instead of retreating in the face of inevitable French retaliation. Reinforcements arrived: 200 Virginia militiamen and 100 British regulars. They brought news from Dinwiddie: congratulations on Washington’s victory and his promotion to colonel.

His inexperience showed in his design of Fort Necessity. He positioned the small, circular palisade in a meadow depression, where surrounding wooded high ground allowed enemy marksmen to fire down with impunity. Worse still, Tanacharison, disillusioned with Washington’s leadership and the British failure to follow through with promised support, had already departed with his warriors weeks earlier. When the French and their Native American allies finally attacked on July 3, heavy rains flooded the shallow trenches, soaking gunpowder and leaving Washington’s men vulnerable inside their poorly designed fortification.

The battle of Fort Necessity was a grueling, daylong engagement in the mud and rain. Approximately 700 French soldiers and their Native American allies surrounded the combined force of 460 Virginia militiamen and British regulars. Despite being outnumbered and outmaneuvered, Washington maintained order among his demoralized troops. When French commander Louis Coulon de Villiers – Jumonville's brother – offered a truce, Washington faced the most humbling moment of his young life: the necessity of surrender. His decision to capitulate was a pragmatic act of leadership that prioritized the survival of his men over personal honor.

The surrender also included a stinging lesson in the nuances of diplomacy. Because Washington could not read French, he signed a document that used the word “l’assassinat,” which translates to “assassination,” to describe Jumonville’s death. This inadvertent admission that he had ordered the assassination of a French diplomat became propaganda for the French, teaching Washington the vital importance of optics in international relations.

Lessons that forged a leader

The 1754 campaign ended in a full retreat to Virginia, and Washington resigned his commission shortly thereafter. Yet, this period was essential in transforming Washington from a man seeking personal glory into one who understood the weight of responsibility.

He learned that leadership required more than courage – it demanded understanding of terrain, cultural awareness of allies and enemies, and political acumen. The strategic importance of the Ohio River Valley, a gateway to the continental interior and vast fur-trading networks, made these lessons all the more significant.

Ultimately, the hard lessons Washington learned at the threshold of Fort Duquesne in 1754 provided the foundational experience for his later role as commander in chief of the Continental Army. The decisions he made in Pennsylvania and the Ohio wilderness, including the impulsive attack, the poor choice of defensive ground and the diplomatic oversight, were the very errors he would spend the rest of his military career correcting.

Though he did not capture Fort Duquesne in 1754, the young George Washington left the woods of Pennsylvania with a far more valuable prize: the tempered, resilient spirit of a leader who had learned from his mistakes.

Christopher Magra, Professor of American History, University of Tennessee

This article is republished from The Conversation under a Creative Commons license. Read the original article.


Urbanism

The Building That Proved Los Angeles Could Go Vertical

Los Angeles once banned skyscrapers, yet City Hall broke the height limit and proved high-rise buildings could be engineered safely in an earthquake zone.

How City Hall Quietly Undermined LA’s Own Height Limits

The Knowledge Series | STM Daily News

For more than half a century, Los Angeles enforced one of the strictest building height limits in the United States. Beginning in 1905, most buildings were capped at 150 feet, shaping a city that grew outward rather than upward.

The goal was clear: avoid the congestion, shadows, and fire dangers associated with dense Eastern cities. Los Angeles sold itself as open, sunlit, and horizontal — a place where growth spread across land, not into the sky.

And yet, in 1928, Los Angeles City Hall rose to 454 feet, towering over the city like a contradiction in concrete.

It wasn’t built to spark a commercial skyscraper boom.

But it ended up proving that Los Angeles could safely build one.

A Rule Designed to Prevent a Manhattan-Style City

The original height restriction was rooted in early 20th-century fears:

- Limited firefighting capabilities

- Concerns over blocked sunlight and airflow

- Anxiety about congestion and overcrowding

- A strong desire not to resemble New York or Chicago

Los Angeles wanted prosperity — just not vertical density.

The height cap reinforced a development model where:

- Office districts stayed low-rise

- Growth moved outward

- Automobiles became essential

- Downtown never consolidated into a dense core

This philosophy held firm even as other American cities raced upward.

Why City Hall Was Never Meant to Change the Rules

City Hall was intentionally exempt from the height limit because the law applied primarily to private commercial buildings, not civic monuments.

But city leaders were explicit about one thing:

City Hall was not a precedent.

It was designed to:

- Serve as a symbolic seat of government

- Stand alone as a civic landmark

- Represent stability, authority, and modern governance

- Avoid competing with private office buildings

In effect, Los Angeles wanted a skyline icon — without a skyline.

Innovation Hidden in Plain Sight

What made City Hall truly significant wasn’t just its height — it was how it was built.

At a time when seismic science was still developing, City Hall incorporated advanced structural ideas for its era:

- A steel-frame skeleton designed for flexibility

- Reinforced concrete shear walls for lateral strength

- A tapered tower to reduce wind and seismic stress

- Thick structural cores that distributed force instead of resisting it rigidly

These choices weren’t about aesthetics — they were about survival.

The Earthquake That Changed the Conversation

In 1933, the Long Beach earthquake struck Southern California, causing widespread damage and reshaping building codes statewide.

Los Angeles City Hall survived with minimal structural damage.

This moment quietly reshaped the debate:

- A tall building had endured a major earthquake

- Structural engineering had proven effective

- Height alone was no longer the enemy — poor design was

City Hall didn’t just survive — it validated a new approach to vertical construction in seismic regions.

Proof Without Permission

Despite this success, Los Angeles did not rush to repeal its height limits.

Cultural resistance to density remained strong, and developers continued to build outward rather than upward. But the technical argument had already been settled.

City Hall stood as living proof that:

- High-rise buildings could be engineered safely in Los Angeles

- Earthquakes were a challenge, not a barrier

- Fire, structural, and seismic risks could be managed

The height restriction was no longer about safety — it was about philosophy.

The Ironic Legacy

When Los Angeles finally lifted its height limit in 1957, the city did not suddenly erupt into skyscrapers. The habit of building outward was already deeply entrenched.

The result:

- A skyline that arrived decades late

- Uneven density across the region

- Multiple business centers instead of one core

- Housing and transit challenges baked into the city’s growth pattern

City Hall never triggered a skyscraper boom — but it quietly made one possible.

Why This Still Matters

Today, Los Angeles continues to wrestle with:

- Housing shortages

- Transit-oriented development debates

- Height and zoning battles near rail corridors

- Resistance to density in a growing city

These debates didn’t begin recently.

They trace back to a single contradiction: a city that banned tall buildings — while proving they could be built safely all along.

Los Angeles City Hall wasn’t just a monument.

It was a test case — and it passed.

Further Reading & Sources

- Los Angeles Department of City Planning – History of Urban Planning in LA

- Los Angeles Conservancy – History & Architecture of LA City Hall

- Water and Power Associates – Early Los Angeles Buildings & Height Limits

- USGS – How Buildings Are Designed to Withstand Earthquakes

- Los Angeles Department of Building and Safety – Building Code History
