Family

The ‘choking game’ and other challenges amplified by social media can come with deadly consequences

Teenagers are increasingly engaging in dangerous games amplified by social media, like the Choking Game and Skullbreaker Challenge, which can have deadly consequences. Parental involvement and healthy risk-taking are essential for prevention and guidance.

Steven Woltering, Texas A&M University and Paige Williams, Texas A&M University

The “choking game” has potentially deadly consequences, as players are challenged to strangle themselves to temporarily cut off oxygen to the brain. It sounds terrifying, but rough estimates suggest that about 10% of U.S. teenagers may have played this type of game at least once.

There’s more, unfortunately: The Skullbreaker Challenge, the Tide Pod Challenge and Car Surfing are but a few of the deadly games popularized through social media, particularly on Snapchat, Instagram, TikTok, YouTube and X – formerly Twitter. Many of these games go back more than a generation, and some are resurging.

The consequences of these so-called games can be deadly. The Skullbreaker Challenge, for example, involves two people kicking the legs out from under a third person, causing them to fall and potentially suffer lasting injuries. Swallowing detergent pods can result in choking and serious illness. A fall from car surfing can lead to severe head trauma.

Coming up with an exact number of adolescent deaths from these activities is difficult. Data is lacking, partly because public health databases do not track these activities well – some deaths may be misclassified as suicides – and partly because much of the existing research is dated.

A 2008 report from the Centers for Disease Control and Prevention found that over a 12-year period, 82 U.S. children died after playing the Choking Game. About 87% of those who died were male, most were alone, and their average age was just over 13. Clearly, updated research is needed to determine the severity of the problem.

Peer pressure and the developing brain

We are a professor of educational neuroscience and a Ph.D. student in educational psychology. Both of us study how children regulate their behaviors and emotions, why teenagers are particularly vulnerable to dangerous games, and how social media amplifies their risks.

Risk-taking is a necessary part of human development, and parents, peers, schools and the broader community play an integral role in guiding and moderating risk-taking. Children are drawn to, and often encouraged to engage in, activities with a degree of social or physical risk, like riding a bike, asking someone for a date or learning how to drive.

Those are healthy risks. They let children explore boundaries and develop risk-management skills. One of those skills is scaffolding. An example of scaffolding is an adult helping a child climb a tree by initially guiding them, then gradually stepping back as the child gains confidence and climbs independently.

Information-gathering is another skill, like asking if swallowing a spoonful of cinnamon is dangerous. A third skill is taking appropriate safety measures – such as surfing with friends rather than going by yourself, or wearing a helmet and having someone nearby when skateboarding.

The perfect storm

During adolescence, the brain is growing and developing in ways that affect maturity, particularly within the circuits responsible for decision-making and emotional regulation. At the same time, hormonal changes increase the drive for reward and social feedback.

All of these biological events are happening as teenagers deal with increasingly complex social relationships while simultaneously trying to gain greater autonomy. The desire for social validation, to impress peers or to attract a potential romantic interest, coupled with less adult supervision, increases the likelihood of participating in risky behaviors. An adolescent might take part in these antics to impress someone they have a crush on or to fit in with others.

That’s why the combination of teenagers and social media can be a perfect storm – and the ideal environment for the proliferation of these dangerous activities.

Social media shapes brain circuits

Social media platforms are driven by algorithms engineered to promote engagement, so they feed users whatever evokes a strong emotional reaction and seem to prioritize sensationalism over safety.

Because teens strongly react to emotional content, they’re more likely to view, like and share videos of these dangerous activities. The problem has become worse as young people spend more time on social media – by some estimates, about five hours a day.

This may be why mood disorders among young people have risen sharply since 2012, about the time social media became widespread. Conditions such as depression and conduct problems, in turn, more than double the likelihood of playing dangerous games. It becomes a vicious cycle.

Rather than parents or real-life friends, TikTok, YouTube and other apps and websites are shaping a child’s brain circuits related to risk management. Social media is replacing what was once the community’s role in guiding risk-taking behavior.

Protecting teens while encouraging healthy risk-taking

Monitoring what teens watch on social media is extraordinarily difficult, and adults are often ill-equipped to help. But there are some things parents can do. Unexplained marks on the neck, bloodshot eyes or frequent headaches may indicate involvement in the choking game. Parents can also flag dangerous videos: some social media sites, such as YouTube, are sensitive to community feedback and will take down a video that is flagged as dangerous.

As parents keep an eye out for unhealthy risks, they should encourage their children to take healthy ones, such as joining a new social group or participating in outdoor activities. These healthy risks help children learn from mistakes, build resilience and improve risk-management skills. The better children can assess and manage potential dangers, the less likely they are to engage in truly unhealthy behaviors.

Many parents, however, have increasingly taken another route: shielding their children from the healthy challenges the real world presents. When that happens, children tend to underestimate more dangerous risks, and they may be more likely to try them.

This issue is systemic, involving schools, government and technology companies alike, each bearing a share of responsibility. However, the dynamic between parents and children also plays a pivotal role. Rather than issuing a unilateral “no” to risk-taking, it’s crucial for parents to engage actively in their children’s healthy risk-taking from an early age.

This helps build a foundation where trust is not assumed but earned, enabling children to feel secure in discussing their experiences and challenges in the digital world, including dangerous activities both online and offline. Such mutual engagement can support the development of a child’s healthy risk assessment skills, providing a robust basis for tackling problems together.

Steven Woltering, Associate Professor of Educational Psychology, Texas A&M University and Paige Williams, Doctoral student in Educational Psychology, Texas A&M University

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Discover more from Daily News

Subscribe to get the latest posts sent to your email.

Lifestyle

Preparing Students for What’s Next in Work

Automation, AI and societal economic changes are affecting the workforce and making a significant impact on the employment prospects of future generations. Consider this guidance to put students on the path toward greater earning potential and economic mobility in a rapidly changing economy.

(Family Features) Automation, AI and societal economic changes are affecting the workforce and making a significant impact on the employment prospects of future generations. More than one-third of today’s college graduates are “underemployed,” meaning they work jobs that don’t require a college degree and may pay less than a living wage, according to data from the Federal Reserve Bank of New York. At the same time, a World Economic Forum report explored how advances in AI threaten access to entry-level and even mid-level jobs for millions of Americans.

Looking ahead, research by Georgetown University indicates that by 2031, 70% of jobs will require education or training beyond high school. However, data from the National Center for Education Statistics indicate that only one-third of high school graduates go on to complete a college degree, and many of those degrees are in fields that do not lead to high-earning, high-growth professions.

These challenges are not lost on today’s students. In a survey by Junior Achievement and Citizens, 57% of teens reported that AI has negatively affected their career outlook, raising concerns about job replacement and the need for new skills. What’s more, a strong majority (87%) expect to earn extra income through side hustles, gig work or social media content creation.

“To put students on the path toward greater earning potential and economic mobility in a rapidly changing economy, students need proactive education and exposure to transferable skills and competencies, such as creative and critical thinking, financial literacy, problem-solving, collaboration and career planning,” said Jack Harris, CEO, Junior Achievement.

This assertion is consistent with findings from the Camber Collective. This social impact consulting group identified four key life experiences that students can consider and explore and that positively affect lifetime earnings:

- Completing secondary education

- Graduating with a degree in a high-paying field of study

- Receiving mentorship during adolescence

- Obtaining a first full-time job with opportunity for advancement

To help students build toward these experiences, families and educators can look for:

- Learning opportunities that are designed with the future in mind. For example, learning experiences offered through Junior Achievement reflect the skills and competencies needed to promote economic mobility.

- Internships or apprenticeships that provide hands-on experience and exposure to a career field that can’t be found in a textbook.

- Volunteer or extracurricular roles that develop communication and leadership skills. Virtually every career field requires these soft skills for growth and greater earning potential.

- Relationships that provide insight and connection. Networking with individuals who are already excelling in a chosen field, as well as peers who share similar aspirations, offers perspective from those who are where you wish to be and potentially opens future doors for employment.

- Courses that offer introductory insight into a chosen career path. Local trade or technical schools and other training organizations may even offer certifications that align with a student’s area of interest.

News

Children can be systematic problem-solvers at younger ages than psychologists had thought – new research

Celeste Kidd’s research challenges long-standing ideas from Jean Piaget about children’s problem-solving abilities. Her findings show that children as young as four can independently use algorithmic strategies to solve complex tasks, contradicting the belief that systematic logical thinking develops only after age seven. This insight highlights the importance of nurturing algorithmic thinking in early education.

Celeste Kidd, University of California, Berkeley

I’m in a coffee shop when a young child dumps out his mother’s bag in search of fruit snacks. The contents spill onto the table, bench and floor. It’s a chaotic – but functional – solution to the problem.

Children have a penchant for unconventional thinking that, at first glance, can look disordered. This kind of apparently chaotic behavior served as the inspiration for developmental psychologist Jean Piaget’s best-known theory: that children construct their knowledge through experience and must pass through four sequential stages, the first two of which lack the ability to use structured logic.

Piaget remains the GOAT of developmental psychology. He fundamentally and forever changed the world’s view of children by showing that kids do not enter the world with the same conceptual building blocks as adults, but must construct them through experience. No one before or since has amassed such a catalog of quirky child behaviors that researchers even today can replicate within individual children.

While Piaget was certainly correct in observing that children engage in a host of unusual behaviors, my lab recently uncovered evidence that upends some long-standing assumptions about the limits of children’s logical capabilities that originated with his work. Our new paper in the journal Nature Human Behaviour describes how young children are capable of finding systematic solutions to complex problems without any instruction.

Video (https://www.youtube.com/embed/Qb4TPj1pxzQ?wmode=transparent&start=0): Jean Piaget describes how children of different ages tackle a sorting task, with varying success.

Putting things in order

Throughout the 1960s, Piaget observed that young children rely on clunky trial-and-error methods rather than systematic strategies when attempting to order objects according to some continuous quantitative dimension, like length. For instance, a 4-year-old child asked to organize sticks from shortest to longest will move them around randomly and usually not achieve the desired final order.

Psychologists have interpreted young children’s inefficient behavior in this kind of ordering task – what we call a seriation task – as an indicator that kids can’t use systematic strategies in problem-solving until at least age 7.

Somewhat counterintuitively, my colleagues and I found that increasing the difficulty and cognitive demands of the seriation task actually prompted young children to discover and use algorithmic solutions to solve it.

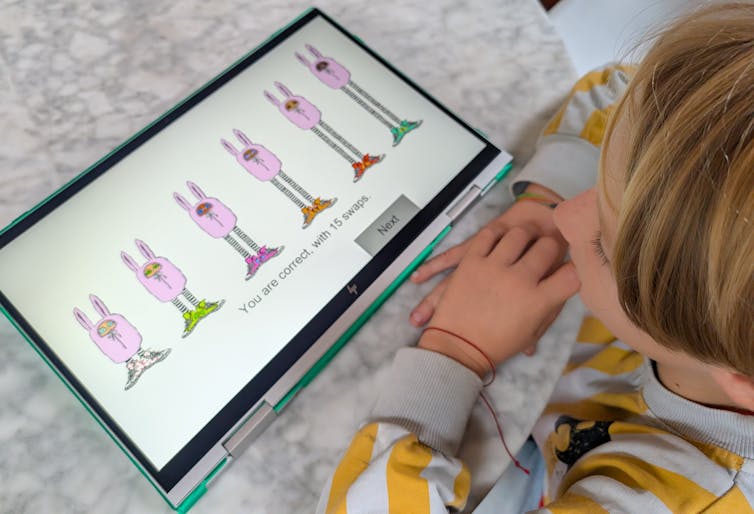

Piaget’s classic study asked children to put some visible items like wooden sticks in order by height. Huiwen Alex Yang, a psychology Ph.D. candidate who works on computational models of learning in my lab, cranked up the difficulty for our version of the task. With advice from our collaborator Bill Thompson, Yang designed a computer game that required children to use feedback clues to infer the height order of items hidden behind a wall.

The game asked children to order bunnylike creatures from shortest to tallest by clicking on their sneakers to swap their places. The creatures only changed places if they were in the wrong order; otherwise they stayed put. Because they could only see the bunnies’ shoes and not their heights, children had to rely on logical inference rather than direct observation to solve the task. Yang tested 123 children between the ages of 4 and 10.

Video (https://www.youtube.com/embed/GlsbcE6nOxk?wmode=transparent&start=0): Researcher Huiwen Alex Yang tests 8-year-old Miro on the bunny sorting task. The bunnies are hidden behind a wall with only their sneakers visible. Miro’s selections exemplify use of selection sort, a classic sorting algorithm from computer science. (Kidd Lab at UC Berkeley)

Figuring out a strategy

We found that children independently discovered and applied at least two well-known sorting algorithms. These strategies – called selection sort and shaker sort – are typically studied in computer science.
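To make the two strategies concrete, here is a minimal Python sketch. It assumes a simplified model of the game as described above: the heights stay hidden, and the only move is selecting two positions, which trade places only if they are in the wrong order. The class `HiddenLineup` and its `try_swap` method are hypothetical names for illustration, not the Kidd Lab’s software, and the selection sort shown is a swap-based variant, since a conditional swap is the only move the game allows.

```python
import random


class HiddenLineup:
    """Hypothetical model of the bunny game (not the Kidd Lab's code).

    Heights are hidden from the player. The only move is to pick two
    positions; the creatures trade places only if the taller one is on
    the left, mirroring the feedback rule described in the article.
    """

    def __init__(self, heights):
        self._heights = list(heights)  # hidden from the "player"
        self.moves = 0                 # number of pair selections made

    def __len__(self):
        return len(self._heights)

    def try_swap(self, i, j):
        """Select positions i < j; swap only if out of order. Returns True if swapped."""
        self.moves += 1
        if self._heights[i] > self._heights[j]:
            self._heights[i], self._heights[j] = self._heights[j], self._heights[i]
            return True
        return False

    def is_sorted(self):
        return all(a <= b for a, b in zip(self._heights, self._heights[1:]))

    def reveal(self):
        return list(self._heights)


def selection_sort(lineup):
    """Fill positions left to right with the shortest remaining creature.

    Comparing position i with every later position j leaves the shortest
    of positions i..n-1 at position i once the inner loop finishes.
    """
    n = len(lineup)
    for i in range(n - 1):
        for j in range(i + 1, n):
            lineup.try_swap(i, j)


def shaker_sort(lineup):
    """Cocktail shaker sort: alternate left-to-right and right-to-left passes
    over neighboring pairs until a full round makes no swaps."""
    lo, hi = 0, len(lineup) - 1
    swapped = True
    while swapped and lo < hi:
        swapped = False
        for i in range(lo, hi):       # forward pass carries the tallest to the right end
            swapped |= lineup.try_swap(i, i + 1)
        hi -= 1
        for i in range(hi, lo, -1):   # backward pass carries the shortest to the left end
            swapped |= lineup.try_swap(i - 1, i)
        lo += 1


if __name__ == "__main__":
    heights = random.sample(range(1, 100), 7)
    for sorter in (selection_sort, shaker_sort):
        game = HiddenLineup(heights)
        sorter(game)
        print(sorter.__name__, game.is_sorted(), game.moves)
```

Because this version of selection sort compares every pair once, it always uses n(n-1)/2 selections; shaker sort can stop early once a full round produces no swaps, which makes it cheaper on lineups that start out nearly in order.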

More than half of the children we tested showed evidence of structured algorithmic thinking, including children as young as 4. While older kids were more likely to use algorithmic strategies, our finding contrasts with Piaget’s belief that children were incapable of this kind of systematic strategizing before age 7. He thought kids first needed to reach what he called the concrete operational stage of development.

Our results suggest that children are actually capable of spontaneous logical strategy discovery much earlier when circumstances require it. In our task, a trial-and-error strategy could not work because the objects to be ordered were not directly observable; children could not rely on perceptual feedback.

Explaining our results requires a more nuanced interpretation of Piaget’s original data. While children may still favor apparently less logical solutions to problems during the first two Piagetian stages, it’s not because they are incapable of doing otherwise if the situation requires it.

A systematic approach to life

Algorithmic thinking is crucial not only in high-level math classes but also in everyday life. Imagine that you need to bake two dozen cookies, but your go-to recipe yields only one dozen. You could go through all the steps of making the recipe twice, washing the bowl in between, but you’d never do that because you know it would be inefficient. Instead, you’d double the ingredients and perform each step only once. Algorithmic thinking is what lets you spot that systematic, more efficient way to get twice as many cookies.

Algorithmic thinking is an important capacity that’s useful to children as they learn to move and operate in the world – and we now know they have access to these abilities far earlier than psychologists had believed.

That children can engage with algorithmic thinking before formal instruction has important implications for STEM – science, technology, engineering and math – education. Caregivers and educators now need to reconsider when and how they give children the opportunity to tackle more abstract problems and concepts. Knowing that children’s minds are ready for structured problems as early as preschool means we can nurture these abilities earlier in support of stronger math and computational skills.

And have some patience next time you encounter children interacting with the world in ways that are perhaps not super convenient. As you pick up your belongings from a café floor, remember that it’s all part of how children construct their knowledge. Those seemingly chaotic kids are on their way to more obviously logical behavior soon.

Celeste Kidd, Professor of Psychology, University of California, Berkeley

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Dive into “The Knowledge,” where curiosity meets clarity. This playlist, in collaboration with STMDailyNews.com, is designed for viewers who value historical accuracy and insightful learning. Our short videos, ranging from 30 seconds to a minute and a half, make complex subjects easy to grasp in no time. Covering everything from historical events to contemporary processes and entertainment, “The Knowledge” bridges the past with the present. In a world where information is abundant yet often misused, our series aims to guide you through the noise, preserving vital knowledge and truths that shape our lives today. Perfect for curious minds eager to discover the ‘why’ and ‘how’ of everything around us. Subscribe and join in as we explore the facts that matter. https://stmdailynews.com/the-knowledge/

Family

Discord Launches Teen-by-Default Settings Globally: What’s Changing (and Why It Matters)

Discord is launching teen-by-default settings globally in early March, adding privacy-forward age assurance, tighter access to age-gated spaces, and new default messaging and content filters.

Discord is rolling out a major shift in how its platform handles teen safety: teen-appropriate settings will become the default experience for all new and existing users worldwide, with age verification required to unlock certain settings and access sensitive or age-gated spaces.

The update is set to begin as a phased global rollout in early March, and Discord says the goal is to strengthen age-appropriate protections while still preserving the privacy, community, and meaningful connection that have made the platform a go-to for gaming and interest-based groups.

Teen-by-default, globally (starting in March)

Discord says the new defaults will apply to all users, not just new signups. In practice, that means accounts will start with a more protective baseline, and verified adults will have more flexibility to adjust settings or access age-restricted content.

Discord is also introducing an age-verification (age assurance) step that may be required to:

- Change certain communication settings

- Access sensitive content

- Enter age-restricted channels, servers, or commands

- Use select message request features

“Nowhere is our safety work more important than when it comes to teen users,” said Savannah Badalich, Head of Product Policy at Discord, adding that the company is building on its existing safety architecture with teen safety principles at the core.

Privacy-forward age assurance: how Discord says it will work

A big part of the announcement is Discord’s attempt to thread the needle between safety and privacy.

Users will be able to choose from multiple methods, including:

- Facial age estimation (video selfie)

- Submitting identification to vendor partners

Discord also says it will implement its age inference model, a background system designed to help determine whether an account belongs to an adult without always requiring users to verify their age. Some users may be asked to use multiple methods if more information is needed to assign an age group.

Discord highlighted several privacy protections in its approach:

- On-device processing: Video selfies for facial age estimation never leave a user’s device.

- Quick deletion: Identity documents submitted to vendor partners are deleted quickly (in most cases, immediately after age confirmation).

- Straightforward verification: In most cases, users complete the process once and their Discord experience adapts to their verified age group.

- Private status: A user’s age verification status cannot be seen by other users.

After completing a chosen method, Discord says users will receive confirmation via a direct message from Discord’s official account. A user’s assigned age group can also be viewed in My Account settings, and users can appeal by retrying the process.

Discord also notes it prompts users to age-assure only within Discord and currently does not send emails or text messages about its age assurance process or results.

What’s changing in the default safety settings

Starting in early March, Discord says it will assign new default settings designed to support age-appropriate experiences while keeping privacy front and center. Highlights include:

- Content filters: Users must be age-assured as adults to unblur sensitive content or turn the setting off.

- Age-gated spaces: Only age-assured adults can access age-restricted channels, servers, and app commands.

- Message Request Inbox: DMs from people a user may not know are routed to a separate inbox by default; only age-assured adults can modify this setting.

- Friend request alerts: People will receive warning prompts for friend requests from users they may not know.

- Stage restrictions: Only age-assured adults may speak on stage in servers.
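The default rules listed above come down to one check applied in several places: relaxing a protection requires an account that has been age-assured as an adult, and every other account keeps the teen defaults. The minimal Python sketch below models that logic under stated assumptions. Every class, field, and function name is hypothetical and does not describe Discord’s actual code or API, and the rule that anyone may turn the content filter back on is an assumption, since the announcement only says unblurring or turning it off requires adult age assurance.

```python
from dataclasses import dataclass
from enum import Enum


class AgeGroup(Enum):
    UNASSURED = "unassured"  # no age-assurance method completed yet
    TEEN = "teen"
    ADULT = "adult"


@dataclass
class Account:
    # Every account, new or existing, starts from the protective baseline.
    age_group: AgeGroup = AgeGroup.UNASSURED
    blur_sensitive_content: bool = True
    route_unknown_dms_to_request_inbox: bool = True


def is_assured_adult(account: Account) -> bool:
    # UNASSURED is treated exactly like TEEN; only ADULT unlocks anything.
    return account.age_group is AgeGroup.ADULT


def can_enter_age_gated_space(account: Account) -> bool:
    return is_assured_adult(account)


def can_speak_on_stage(account: Account) -> bool:
    return is_assured_adult(account)


def should_warn_on_friend_request(sender_is_known: bool) -> bool:
    # Warning prompts apply to requests from people the user may not know.
    return not sender_is_known


def set_blur_sensitive_content(account: Account, enabled: bool) -> None:
    # Assumption: re-enabling the filter is allowed for anyone;
    # turning it off (unblurring) requires adult age assurance.
    if not enabled and not is_assured_adult(account):
        raise PermissionError("adult age assurance required")
    account.blur_sensitive_content = enabled


def set_route_unknown_dms(account: Account, enabled: bool) -> None:
    # Only age-assured adults may change this setting at all.
    if not is_assured_adult(account):
        raise PermissionError("adult age assurance required")
    account.route_unknown_dms_to_request_inbox = enabled
```

Treating an unassured account exactly like a teen account is what makes the experience teen-by-default in this sketch: an account only gains adult flexibility after an age-assurance method succeeds and its age group changes to adult.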

Discord notes that it launched a teen-by-default experience in the UK and Australia last year and says this global rollout builds on that approach to deliver consistent protections worldwide.

Giving teens a seat at the table: Discord Teen Council

Along with the safety updates, Discord also announced recruitment for its inaugural Discord Teen Council, a teen advisory body intended to bring authentic teen perspectives into how Discord shapes their experience.

Discord says the Teen Council will consist of 10–12 teens and will help inform future product features, policies, and educational resources.

- Who can apply: Teens ages 13–17

- Apply by: May 1, 2026

The bigger picture

Discord says these updates build on its broader safety ecosystem, including tools and resources such as Family Center, Teen Safety Assist, a Warning System, and more.

Whether you’re a parent, a teen user, or an adult who uses Discord for gaming communities and group chats, the headline is simple: the default experience is becoming more restrictive, and adult access will increasingly depend on age assurance.

Source: PRNewswire
